AI Risk Rules

AI Risk Rules help train Clarative's AI to automatically classify and prioritize risk events from your vendors. These rules enable intelligent filtering of incidents, negative news, regulatory filings, and other risk events based on your organization's specific risk tolerance and priorities.

Risk Classification Types

Clarative supports multiple risk classification levels to help you prioritize vendor risk events effectively:

- Critical Risk - Events that require immediate attention and escalation. Examples include major security breaches, complete service outages, or other incidents with severe business impact.

- High Risk - Events that cause significant operational disruption and need urgent review. Examples include major outages, data loss, critical system failures, or vendor bankruptcies.

- Medium Risk - Events that result in moderate impact to operations, such as data unavailability, processing delays, or availability issues that need timely attention.

- Low Risk - Events that cause minor service disruptions or have limited operational impact, such as temporary slowdowns or non-critical issues.

- No Risk - Events that don't have a material impact on your organization, such as general news, stock speculation, or other information without specific vendor risk implications.
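
Because the levels form an ordered scale, it can be convenient to model them that way if you export classified events into your own reporting or alerting scripts. The following Python sketch is purely illustrative: the `RiskLevel` enum, its numeric values, and the `needs_escalation` and `highest` helpers are hypothetical and are not part of Clarative's product or any Clarative API.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Hypothetical ordered model of the five risk levels (higher = more severe)."""
    NO_RISK = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def needs_escalation(level: RiskLevel, threshold: RiskLevel = RiskLevel.HIGH) -> bool:
    """Return True if an event at `level` meets or exceeds the escalation threshold."""
    return level >= threshold


def highest(levels: list[RiskLevel]) -> RiskLevel:
    """Return the most severe level in a batch of events (NO_RISK for an empty batch)."""
    return max(levels, default=RiskLevel.NO_RISK)
```

With this ordering, `needs_escalation(RiskLevel.MEDIUM)` returns `False` and `highest([RiskLevel.LOW, RiskLevel.HIGH])` returns `RiskLevel.HIGH`. Using `IntEnum` lets comparisons such as `>=` work directly on the levels.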

Event Types Supported

AI Risk Rules can be configured for different types of risk events:

- Incidents - Service disruptions, outages, and operational issues reported by vendors.

- Negative News - Media coverage that may indicate reputational, operational, or financial risks.

- Security Breaches - Media coverage of security breaches or other security incidents involving your vendors.

- SEC Filing Risk Factors - Regulatory filings that highlight potential business risks and compliance issues.

How to Create AI Risk Rules

-

Access Rule Configuration: Navigate to the Risk tab and click the Configure AI Triaging button to access the rule management interface.

-

Define Rule Parameters:

- Name: Provide a descriptive name for the rule (e.g., "Medium Risk - Data Availability")

- Event Types: Choose which event types this rule should apply to

- Vendor Scope: Specify if the rule applies to all vendors or specific ones

- SLA Scope: For incidents, you can further filter rules to apply to incidents from particular SLAs. See SLA configuration for more details about SLA-based incident filtering.

-

Configure Rule Logic: Write clear instructions that describe when events should be classified with this rule:

- Be specific about the conditions that trigger the classification

- Include the desired risk level classification

- Consider operational impact, data sensitivity, and business criticality

-

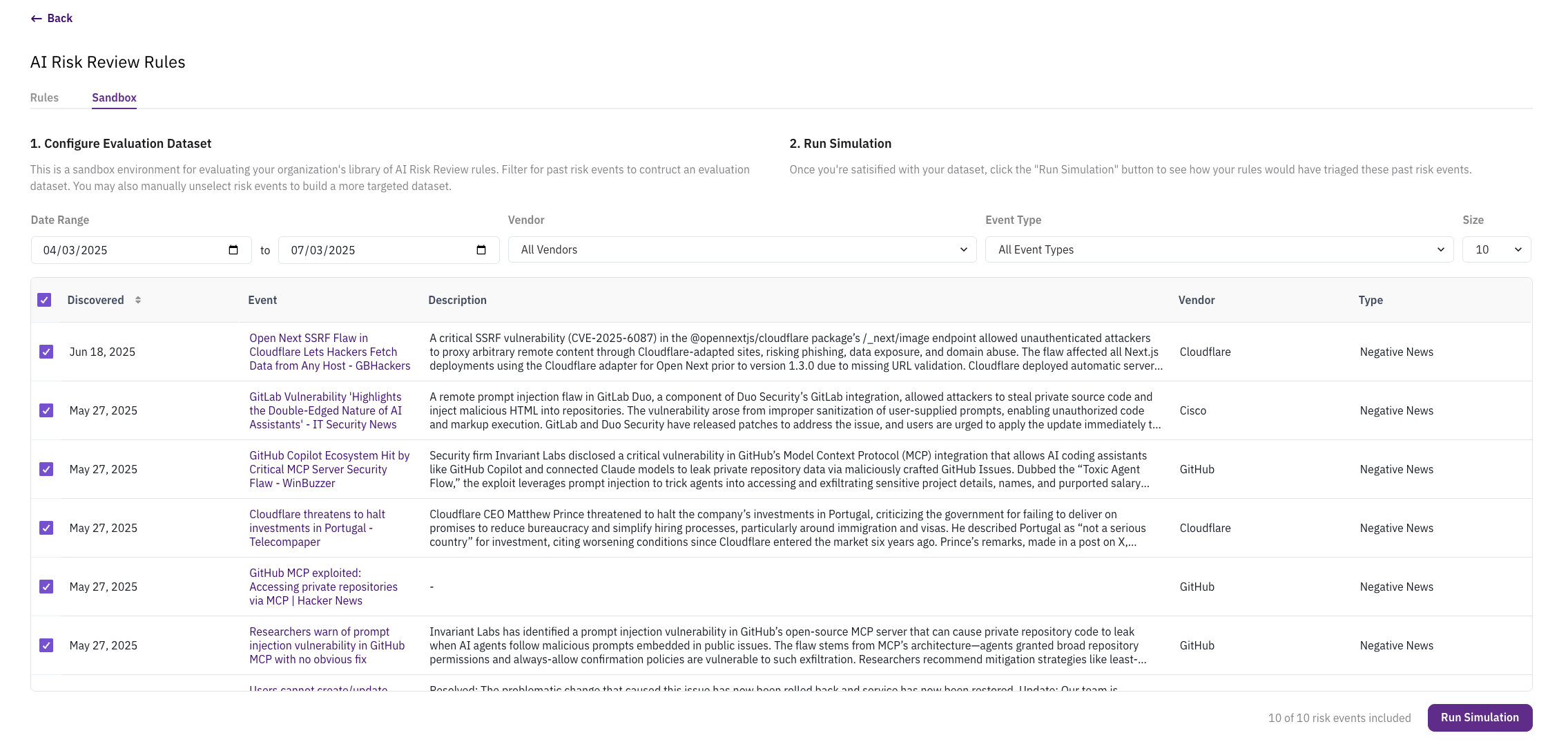

Test and Validate: Use the Sandbox environment to test your rules against historical risk events:

- Filter past events by date range and vendor

- Run simulations to see how your rules would classify existing events

- Review results and refine rule logic as needed
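
It can help to think of a rule as a scope (event types, vendors, and, for incidents, SLAs) plus natural-language instructions. The Python sketch below is a hypothetical model of the fields from the Define Rule Parameters and Configure Rule Logic steps, not Clarative's data format or API; the class names, the use of `None` to mean "all vendors" or "all SLAs", and the example vendor and SLA names are assumptions. It only checks whether an event falls within a rule's scope; choosing the risk level from the instructions is done by Clarative's AI and is not modeled here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class EventType(Enum):
    """Event types a rule can target (hypothetical identifiers)."""
    INCIDENT = "incident"
    NEGATIVE_NEWS = "negative_news"
    SECURITY_BREACH = "security_breach"
    SEC_RISK_FACTOR = "sec_risk_factor"


@dataclass
class RiskRule:
    """Hypothetical model of the fields entered when creating a rule."""
    name: str
    event_types: set[EventType]
    instructions: str                   # natural-language logic interpreted by the AI
    vendors: Optional[set[str]] = None  # None means the rule applies to all vendors
    slas: Optional[set[str]] = None     # None means all SLAs; only checked for incidents


@dataclass
class RiskEvent:
    """Minimal view of a vendor risk event for scope checks."""
    vendor: str
    event_type: EventType
    sla: Optional[str] = None


def rule_in_scope(rule: RiskRule, event: RiskEvent) -> bool:
    """Return True if the event matches the rule's event-type, vendor, and SLA scope."""
    if event.event_type not in rule.event_types:
        return False
    if rule.vendors is not None and event.vendor not in rule.vendors:
        return False
    if event.event_type is EventType.INCIDENT and rule.slas is not None:
        return event.sla in rule.slas
    return True


# Example rule: descriptive name, specific conditions, explicit risk level.
EXAMPLE_RULE = RiskRule(
    name="Medium Risk - Data Availability",
    event_types={EventType.INCIDENT},
    instructions=(
        "Classify as Medium Risk when an incident makes data temporarily "
        "unavailable or delays processing, and no data is lost."
    ),
    vendors={"Acme Cloud"},  # hypothetical vendor name
    slas={"Storage API"},    # hypothetical SLA name
)
```

For instance, an incident from "Acme Cloud" under the "Storage API" SLA is in scope for `EXAMPLE_RULE`, while negative news about the same vendor is not.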

Best Practices

- Start Broad, Then Narrow: Begin with general rules and add more specific ones as you identify patterns in your risk events.

- Clear Instructions: Write rule instructions that are specific and actionable to ensure consistent AI classification.

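- Avoid Conflicting Rules: When multiple rules can apply to the same event, make sure they don't call for different risk levels. Narrow the scope or conditions of overlapping rules so each event has one clear classification.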

- Test Thoroughly: Always test rules in the Sandbox before deploying to production to avoid misclassification of critical events.
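
One way to catch conflicting rules early is to list pairs of rules whose scopes overlap but whose target risk levels differ. The sketch below is hypothetical: the simplified `Rule` model (a target level plus event-type and vendor scope) and the `possible_conflicts` helper are illustrative assumptions, not a Clarative feature. Overlapping scope is only a signal to review the pair's instructions, since narrower rules are expected to overlap broader ones when you start broad and then narrow.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified rule: a target risk level plus its scope."""
    name: str
    level: str                                # e.g. "High", "Medium"
    event_types: frozenset[str]
    vendors: Optional[frozenset[str]] = None  # None means all vendors


def scopes_overlap(a: Rule, b: Rule) -> bool:
    """True if some event could fall within both rules' event-type and vendor scope."""
    if not (a.event_types & b.event_types):
        return False
    if a.vendors is None or b.vendors is None:
        return True
    return bool(a.vendors & b.vendors)


def possible_conflicts(rules: list[Rule]) -> list[tuple[str, str]]:
    """List pairs of rules with overlapping scope but different target levels.

    Overlap alone is not a conflict: review each pair's instructions to
    confirm whether the same event could satisfy both.
    """
    return [
        (a.name, b.name)
        for a, b in combinations(rules, 2)
        if a.level != b.level and scopes_overlap(a, b)
    ]
```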

Rule Testing and Optimization

The Sandbox environment allows you to validate rule effectiveness before deploying them:

1. Configure Evaluation Dataset: Set date ranges and vendor filters to create a representative sample of past risk events.

2. Run Simulation: Execute your rules against the evaluation dataset to see classification results.

3. Review Results: Analyze how events are classified and identify any misclassifications or gaps in coverage.

4. Iterate and Improve: Refine rule instructions based on simulation results to improve accuracy.
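
If you keep your own record of the risk level you expected for each event in the evaluation dataset (for example, in a spreadsheet alongside the Sandbox results), a short script can summarize how often the simulated classification matched. The Python sketch below assumes such a record exists; the `SimulatedEvent` fields and the `select_events` and `review` helpers are hypothetical and are not a Clarative export format or API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SimulatedEvent:
    """A past event with the level you expected and the level the rules produced."""
    title: str
    vendor: str
    occurred: date
    expected: str
    simulated: str


def select_events(
    events: list[SimulatedEvent],
    start: date,
    end: date,
    vendors: Optional[set[str]] = None,
) -> list[SimulatedEvent]:
    """Mirror the dataset filters: keep events dated within [start, end] from chosen vendors."""
    return [
        e for e in events
        if start <= e.occurred <= end and (vendors is None or e.vendor in vendors)
    ]


def review(events: list[SimulatedEvent]) -> tuple[float, list[SimulatedEvent]]:
    """Return the share of events classified as expected, plus the misclassified ones."""
    if not events:
        return 0.0, []
    misses = [e for e in events if e.simulated != e.expected]
    return (len(events) - len(misses)) / len(events), misses
```

The list of misses is the useful part for iteration: each misclassified event points to a rule whose instructions may need a more specific condition.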

Need Help?

Contact support at support@clarative.ai for assistance with configuring AI risk rules or optimizing your risk classification strategy.